As anyone who's heard of the 12 factor app knows, logs are best outputted to the standard output of the process and have the environment handle the logs accordingly. A while back I worked at a company where we collected logs with the EFK stack. We had quite a bit of logs and ingested daily and tracking down issues in Kibana using regular expressions became unwieldy. Structured logging (read json lines, where every line is a json object) was the answer. It would allow us to more easily find all of the logs for a single transaction or account and our EFK stack could parse out-of-the-box.

There was a previous attempt at structured logs before I joined the team that failed to be merged. It was too invasive, the diff too big, it never worked correctly and it required too much work to carry the diff forward and eventually the branch was dropped.

Another complaint I heard from the developers about that previous attempt was that it made reading the logs when developing locally (reading the logs from the Docker container) or for failing CI builds more difficult. Indeed, it was not human-readable.

Others at the company (with experience log4j and enterprise software) wanted to output all of the logs to 2 files with different formats. I saw this as a mistake. It would make handling logs inside of containers more difficult. It would require dealing with log rotation.

Another goal for me was to be able gradually convert components to the new log handling so we won't end up with a huge diff that's never merged again.

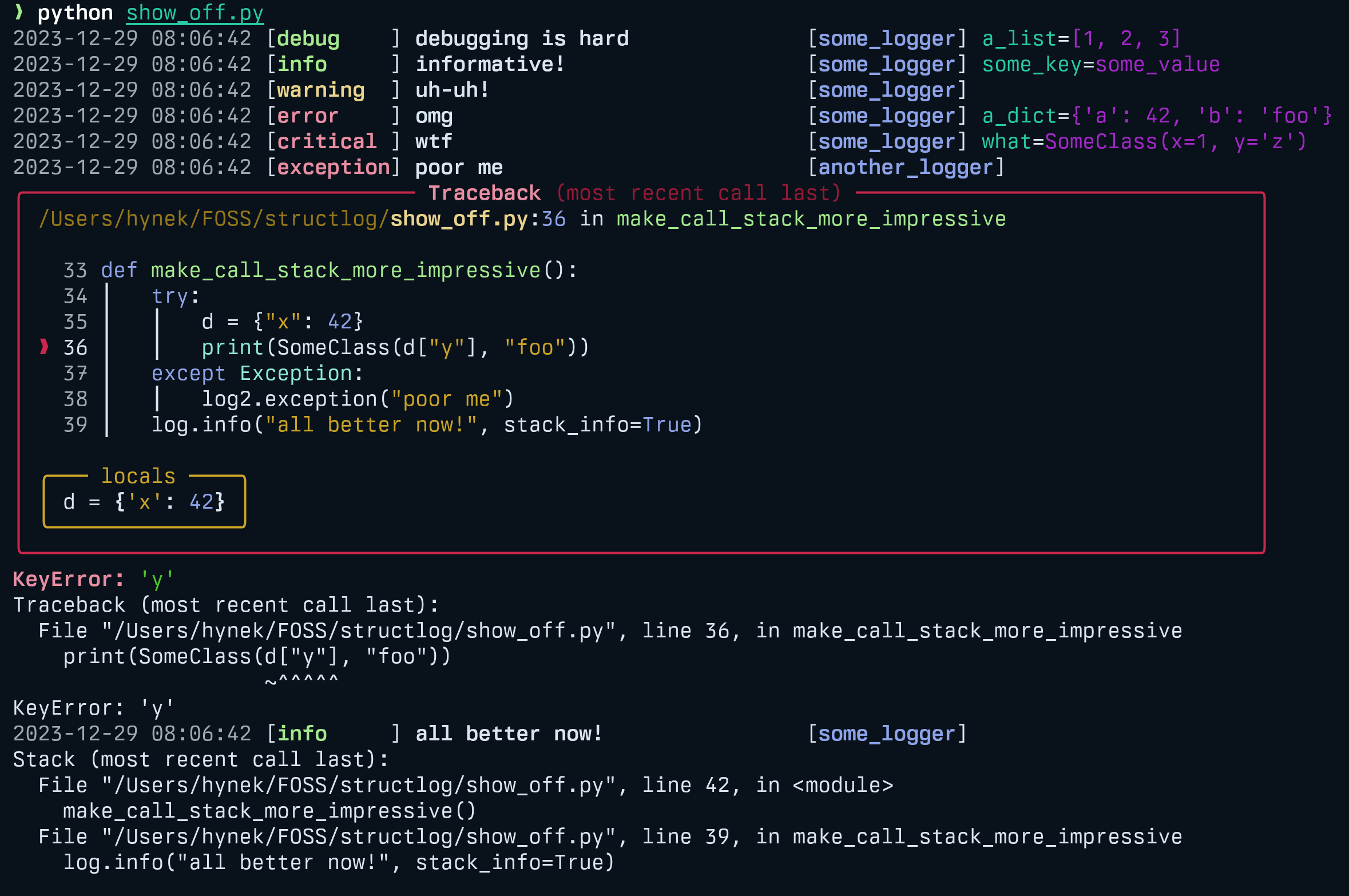

I went with the structlog package and after delving in to it, I created a module that only requires importing to use it. The module configures the log format (human or json) and level according to environment variables but it doesn't require any change to the logging calls. The structlog package also comes with a great looking console renderer where ever control character are valid. Here's how it looks:

And here's the module:

import logging import logging.config import structlog import structlog.stdlib import structlog.processors import structlog.dev import os emptyLogRecord = logging.makeLogRecord({}) def add_extra_params(logger, log_method, event_dict): record = event_dict['_record'] for (k, v) in list(record.__dict__.items()): if k != 'stack_info' and k not in emptyLogRecord.__dict__ and k not in event_dict: # noqa: E501 event_dict[k] = v return event_dict pre_chain = [ structlog.processors.StackInfoRenderer(), structlog.stdlib.PositionalArgumentsFormatter(), structlog.stdlib.add_log_level, structlog.stdlib.add_logger_name, structlog.processors.TimeStamper(fmt='%Y-%m-%d %H:%M.%S'), structlog.processors.format_exc_info, add_extra_params, ] processor = { 'human': structlog.dev.ConsoleRenderer(), 'json': structlog.processors.JSONRenderer(), } logFormat = os.environ.get('LOG_FORMAT', 'human') assert logFormat in processor try: logLevel = getattr(logging, os.environ.get('LOG_LEVEL', 'WARN').upper()) except AttributeError: logLevel = logging.WARN # The default log level. config = { 'version': 1, 'disable_existing_loggers': True, 'formatters': { 'json': { '()': structlog.stdlib.ProcessorFormatter, 'processor': processor['json'], 'foreign_pre_chain': pre_chain, 'format': '%(message)s', }, 'human': { '()': structlog.stdlib.ProcessorFormatter, 'processor': processor['human'], 'foreign_pre_chain': pre_chain, }, }, 'handlers': { 'default': { 'formatter': logFormat, 'class': 'logging.StreamHandler', }, }, 'loggers': { '': { 'handlers': ['default'], 'level': logLevel, 'propegate': True, }, }, } logging.config.dictConfig(config) structlog.configure( processors=[processor[logFormat]], context_class=dict, logger_factory=structlog.stdlib.LoggerFactory(), )

I called this module logging_config and if a module had imported it, then logs would be human readable by default and if the LOG_FORMAT environment variable was set to json (it would be in the staging and production environment where we had an EFK stack running and collecting logs) the output was in json format.

This was the first step and it could be merged without breaking existing components. However, most logging calls still had formatted strings which didn't expose all of the data in a structured format. We had to go module by module and change to have a single, static log message and pass all of the variables in the extra parameter. It would mean changing calls that looked like this:

logger.error(f"Failed to send message {message} to user {uid}.")

to something like this:

logger.error("Failed to send message.", extra={"message": message, "uid": uid})

We could add fields to the output easily, deal with missing parameters more easily, output the Flask request context more easily, etc. We could do this piecemeal, going through one module at a time and not have to keep a growing diff maintained. It would keep the human readable logs where needed and have easily searchable logs in Kibana where available. We kept to the 12 factor app methodology and the benefits of it. It required little change to our setup and deployment. It had noticeable benefits out of gate which made the Dev team pitch in with converting modules to the new logging calls convention.